Current visual detectors, though impressive within their training distribution, often fail to parse out-of-distribution scenes into their constituent entities. Recent test-time adaptation methods use auxiliary self-supervised losses to adapt the network parameters to each test example independently and have shown promising results towards generalization outside the training distribution for the task of image classification.

In our work, we find evidence that these losses are insufficient for the task of scene decomposition, without also considering architectural inductive biases. Recent slot-centric generative models attempt to decompose scenes into entities in a self-supervised manner by reconstructing pixels.

Drawing upon these two lines of work, we propose Slot-TTA, a semi-supervised slot-centric scene decomposition model that at test time is adapted per scene through gradient descent on reconstruction or cross-view synthesis objectives. We evaluate Slot-TTA across multiple input modalities, images or 3D point clouds, and show substantial out-of-distribution performance improvements against state-of-the-art supervised feed-forward detectors, and alternative test-time adaptation methods.

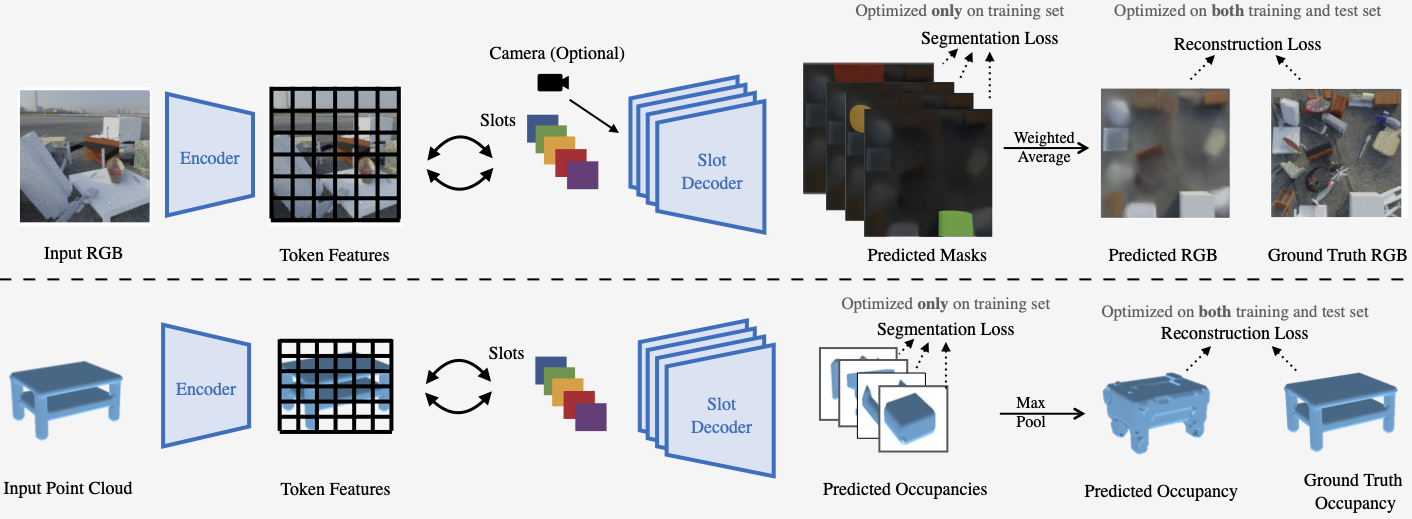

Model architecture for Slot-TTA for posed multi-view or single-view RGB images (top) and 3D point clouds (bottom).

Slot-TTA maps the input (multi-view posed) RGB images or 3D point cloud to a set of token features with appropriate encoder backbones. It then maps these token features to a set of slot vectors using Slot Attention. Finally, it decodes each slot into its respective segmentation mask and RGB image or 3D point cloud. It uses weighted averaging or max-pooling to fuse renders across all slots. For RGB images, we show results for multi-view and single-view settings, where in the multi-view setting the decoder is conditioned on a target camera viewpoint. We train Slot-TTA using reconstruction and segmentation losses. At test time, we optimize only the reconstruction loss.

We visualize point cloud reconstruction and segmentation during test-time adaptation steps. As can be seen, segmentation improves when optimizing the reconstruction objective via gradient descent at test time on a single test sample. In this setup, we train with supervision on some categories of PartNet and test on novel categories.
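The slot fusion step in the architecture description above can be illustrated with a minimal NumPy sketch of the weighted-averaging case for RGB images. It is not the authors' implementation: the function name `fuse_slots`, the tensor shapes, and the use of a softmax over slots to obtain the averaging weights are all assumptions made for illustration, and the max-pooling variant is not shown.

```python
import numpy as np


def fuse_slots(slot_rgb, slot_mask_logits):
    """Fuse per-slot renders into one image by weighted averaging.

    slot_rgb:         (K, H, W, 3) RGB render decoded from each of K slots.
    slot_mask_logits: (K, H, W) unnormalized mask score for each slot.
    The softmax normalization over slots is an assumption of this sketch.
    """
    # Numerically stable softmax over the slot axis, so that the K weights
    # at every pixel are non-negative and sum to one.
    shifted = slot_mask_logits - slot_mask_logits.max(axis=0, keepdims=True)
    weights = np.exp(shifted)
    weights /= weights.sum(axis=0, keepdims=True)

    # Weighted average of the per-slot renders gives the fused image.
    image = (weights[..., None] * slot_rgb).sum(axis=0)

    # Assigning each pixel to its highest-weight slot gives a segmentation.
    segmentation = weights.argmax(axis=0)
    return image, weights, segmentation


# Hypothetical example: 4 slots rendering an 8x8 image.
rng = np.random.default_rng(0)
K, H, W = 4, 8, 8
slot_rgb = rng.uniform(size=(K, H, W, 3))
slot_mask_logits = rng.normal(size=(K, H, W))
image, weights, segmentation = fuse_slots(slot_rgb, slot_mask_logits)
```

Because the segmentation is read off the same weights that compose the reconstruction, lowering the reconstruction error also changes the masks, which is the coupling that test-time adaptation relies on.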

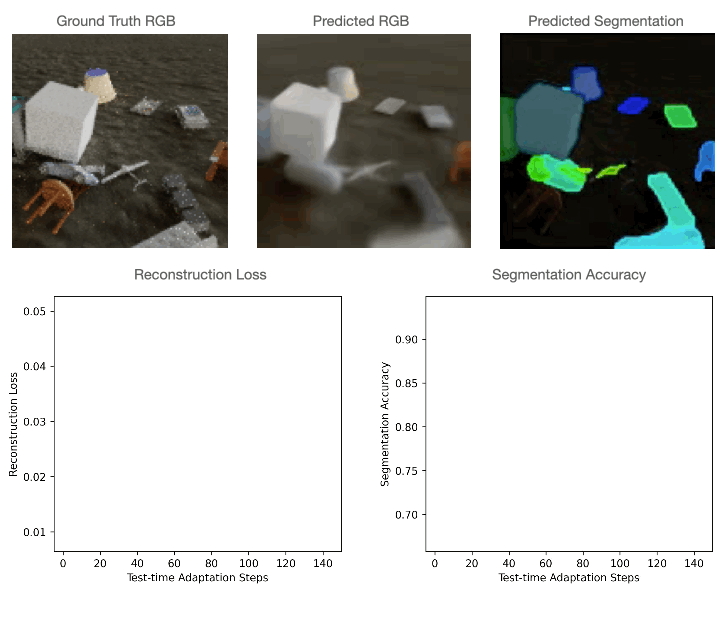

We visualize RGB reconstruction and segmentation during test-time adaptation steps. As can be seen, segmentation of the target viewpoint improves when optimizing the view synthesis objective via gradient descent at test time on a single test scene. In this setup, we train with supervision on Kubric's MSN-Easy and test on MSN-Hard.

We visualize RGB reconstruction during test-time adaptation steps. As can be seen, segmentation improves when optimizing the reconstruction objective via gradient descent at test time on a single test sample. In this setup, we train with supervision on the CLEVR dataset and test on CLEVR-TEX.
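The per-example adaptation loop shared by the setups above can be sketched as follows. This is a toy stand-in, not Slot-TTA itself: a small linear autoencoder with hand-written gradients replaces the slot-centric encoder and decoder, and the names `adapt_to_example`, `enc0`, `dec0`, the step count, and the learning rate are all hypothetical. What it does illustrate is the procedure named in the text: start from the trained weights, take gradient steps on the reconstruction loss of a single test example, and leave the trained weights untouched for the next example.

```python
import numpy as np


def reconstruction_loss(x, enc, dec):
    """Mean squared error between x and its reconstruction."""
    recon = (x @ enc) @ dec
    return np.mean((recon - x) ** 2)


def adapt_to_example(x, enc, dec, steps=200, lr=0.1):
    """Adapt copies of the weights to one test example by gradient descent.

    Only the self-supervised reconstruction loss is used; no labels are
    needed. The input weights are copied, so every test example starts
    from the same trained parameters.
    """
    enc, dec = enc.copy(), dec.copy()
    losses = [reconstruction_loss(x, enc, dec)]
    for _ in range(steps):
        hidden = x @ enc
        recon = hidden @ dec
        # Gradient of the mean squared error with respect to recon.
        grad_recon = 2.0 * (recon - x) / x.size
        # Backpropagate through the decoder and then the encoder.
        grad_dec = hidden.T @ grad_recon
        grad_enc = x.T @ (grad_recon @ dec.T)
        enc -= lr * grad_enc
        dec -= lr * grad_dec
        losses.append(reconstruction_loss(x, enc, dec))
    return enc, dec, losses


# Hypothetical "trained" weights and a single test example
# (64 feature vectors of dimension 8).
rng = np.random.default_rng(0)
x_test = rng.normal(size=(64, 8))
enc0 = 0.1 * rng.normal(size=(8, 4))
dec0 = 0.1 * rng.normal(size=(4, 8))
enc0_before = enc0.copy()
enc, dec, losses = adapt_to_example(x_test, enc0, dec0)
```

In the actual model, the quantity of interest is the segmentation produced after adaptation rather than the reconstruction loss alone; the toy model here has no segmentation output, so it only shows the optimization pattern.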

@misc{prabhudesai2022test,
  title={Test-time Adaptation with Slot-Centric Models},
  author={Prabhudesai, Mihir and Goyal, Anirudh and Paul, Sujoy and van Steenkiste, Sjoerd and Sajjadi, Mehdi SM and Aggarwal, Gaurav and Kipf, Thomas and Pathak, Deepak and Fragkiadaki, Katerina},
  year={2022},
  eprint={2203.11194},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}